Web scraping has become an essential tool for businesses, researchers, and developers looking to extract valuable data from the internet. However, scraping JavaScript-heavy websites presents unique challenges. Unlike static HTML pages, where data can be extracted directly from the source code, JavaScript-heavy websites load content dynamically. This article explores the best methods for javascript web scraping such websites, along with how services like PromptCloud can help with large-scale data extraction.

Source: crawlbase

Top Challenges in Scraping JavaScript-Loaded Web Pages?

JavaScript-heavy websites pose several difficulties for traditional web scrapers:

- Dynamic Content Loading: Many modern websites use JavaScript frameworks like React, Angular, or Vue.js to load content dynamically. This means that an initial page request may not contain all the data needed.

- AJAX Calls: Websites often use AJAX requests to fetch data asynchronously from APIs, making it harder to retrieve information through static scraping methods.

- Infinite Scrolling and Pagination: Some websites load content continuously as users scroll, complicating the data extraction process.

- CAPTCHAs and Anti-Scraping Mechanisms: Websites frequently implement measures to detect and block scrapers, including CAPTCHAs, IP blocking, and browser fingerprinting.

- Session-Based Authentication: Many websites require authentication and maintain session states, making it challenging to scrape restricted content.

To successfully extract data from JavaScript-heavy websites, developers must use advanced techniques tailored to these challenges.

Best Techniques to Scrape JavaScript-Heavy Websites Efficiently?

1. Headless Browsers

Headless browsers like Puppeteer (for Chrome) and Playwright allow developers to render JavaScript-heavy pages and extract dynamic content. These tools enable full browser automation, making them highly effective for scraping websites that rely heavily on JavaScript execution.

Advantages:

- Can render full web pages, including dynamically loaded content

- Supports automation of user interactions, such as clicking buttons and filling forms

- Can bypass simple anti-scraping mechanisms by mimicking real users

Disadvantages:

- Consumes significant system resources

- Slower compared to direct HTTP requests

- May require frequent maintenance due to website changes

2. Using Browser Extensions

Some browser extensions, like Web Scraper or Scraper API, provide an easy way to extract data without writing extensive code. These tools work directly within the browser and allow users to select elements for extraction.

Advantages:

- No coding required

- User-friendly interface

- Ideal for small-scale scraping tasks

Disadvantages:

- Limited automation capabilities

- Not suitable for large-scale scraping

3. Network Interception and API Extraction

Many JavaScript-heavy websites rely on backend APIs to fetch data. By inspecting network requests in the browser’s developer tools, developers can identify and directly access these API endpoints.

Advantages:

- Faster than rendering entire web pages

- Extracts clean JSON data instead of parsing HTML

- Reduces the risk of detection by anti-scraping mechanisms

Disadvantages:

- Requires understanding of network protocols

- Websites may employ encryption or token-based authentication

4. Proxy and Rotating IPs

Many websites implement anti-scraping measures that block repeated requests from the same IP address. Using proxy servers or rotating IPs helps evade these restrictions.

Advantages:

- Reduces the likelihood of getting blocked

- Allows scraping at scale across multiple regions

Disadvantages:

- Increases operational costs

- Some websites detect and block known proxy services

5. Scheduled Scraping with Serverless Computing

For large-scale scraping, serverless platforms like AWS Lambda or Google Cloud Functions can automate scraping tasks at scheduled intervals. This method ensures continuous data collection without the need for dedicated infrastructure.

Advantages:

- Scalable and cost-effective

- No need to maintain physical servers

- Suitable for scheduled data extraction

Disadvantages:

- Requires knowledge of cloud computing services

- Limited execution time for each function

6. Machine Learning for CAPTCHA Bypassing

Websites often use CAPTCHAs to deter automated scraping. Machine learning models can be trained to recognize and bypass simple CAPTCHAs, or CAPTCHA-solving services can be used to handle more complex challenges.

Advantages:

- Increases the efficiency of web scrapers

- Enables scraping of restricted content

Disadvantages:

- Ethical and legal concerns

- Adds additional complexity and cost

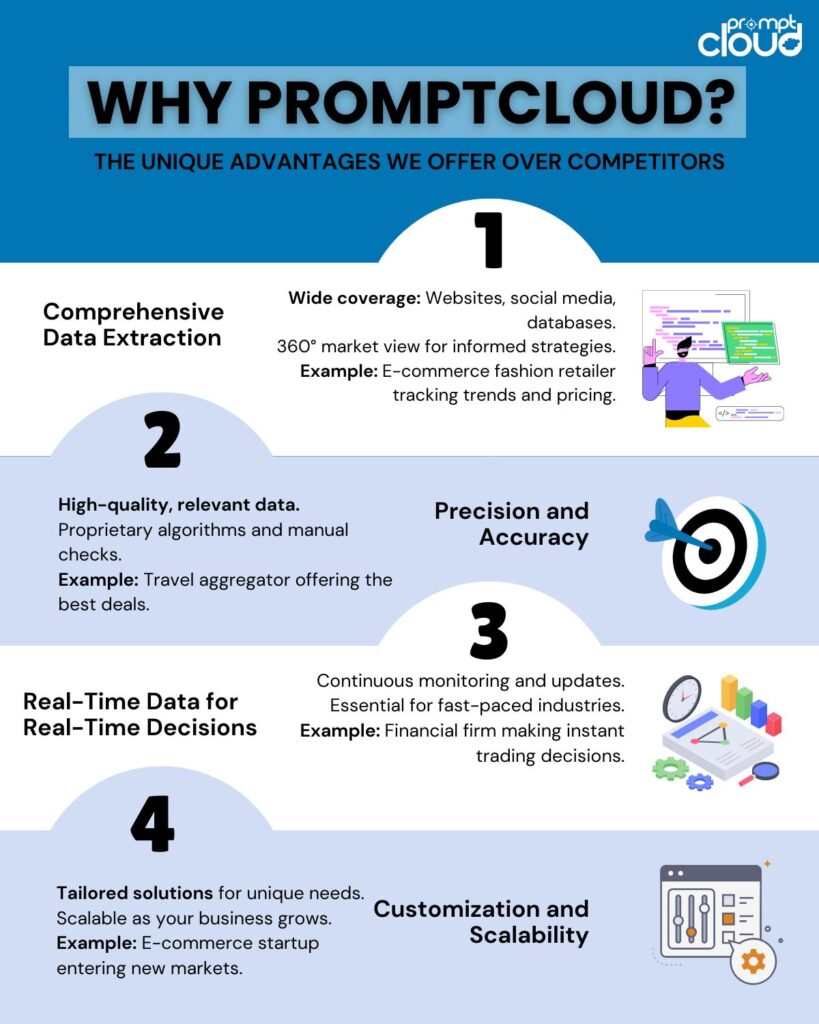

Why PromptCloud is Ideal for Large-Scale JavaScript Web Scraping?

For businesses and developers looking to scale their Javascript web scraping operations, managing infrastructure, rotating proxies, handling CAPTCHAs, and ensuring high data accuracy can become overwhelming. This is where PromptCloud comes into play.

PromptCloud is a cloud-based javascript web scraping service that specializes in large-scale data extraction. It offers a fully managed solution that takes care of the complexities associated with javascript web scraping. Here’s how PromptCloud helps:

- Handling JavaScript-Heavy Websites: PromptCloud uses advanced headless browsers and dynamic rendering techniques to extract data from modern web applications seamlessly.

- Data Extraction at Scale: Whether scraping thousands or millions of pages, PromptCloud provides scalable solutions with optimized infrastructure.

- IP Rotation and Anti-Scraping Measures: With built-in proxy management, PromptCloud ensures that scrapers remain undetected and avoid IP bans.

- Structured Data Delivery: PromptCloud provides clean, structured data in various formats (JSON, CSV, XML) tailored to business needs.

- Automated Scheduling: The platform allows users to schedule scraping jobs at predefined intervals, ensuring continuous data flow without manual intervention.

- Custom Scraping Solutions: Unlike generic scraping tools, PromptCloud offers tailored solutions based on specific business requirements, making it ideal for enterprises needing high-quality data.

Conclusion

Scraping JavaScript-heavy websites requires advanced techniques due to dynamic content loading, AJAX calls, and anti-scraping mechanisms. Headless browsers, API extraction, proxy management, and scheduled scraping can help developers extract data efficiently. However, for large-scale operations, managing infrastructure and compliance becomes challenging.

This is where PromptCloud proves invaluable by offering scalable, automated, and legally compliant data extraction solutions. Whether you’re a business looking for market intelligence or a developer working on a data-driven project, PromptCloud provides the tools needed to collect high-quality web data without the hassle.By choosing the right scraping methods and leveraging professional services like PromptCloud, businesses and developers can harness the full potential of web data without getting bogged down by technical challenges. For custom web scraping solutions, get in touch with us at sales@promptcloud.com