Over the years, we’ve been asked by a lot of users if it’s easy to get SerpApi data into Google BigQuery. The answer is an emphatic yes.

With just a little bit of code, you can start syncing search engine data from SerpApi for whatever analytics project you’re working on in BigQuery.

For those of you not familiar with BigQuery, here’s what it is in Google’s own words:

BigQuery is a fully managed, AI-ready data analytics platform that helps you maximize value from your data and is designed to be multi-engine, multi-format, and multi-cloud.

In this tutorial, we’ll go over how to create a Python script to fetch data from SerpApi and send it to a BigQuery dataset. Specifically, we’ll retrieve restaurant data from the Google Maps API and grab a handful of reviews for each restaurant from the Google Maps Reviews API. You could follow these steps for any of our scraping APIs including the standard Google Search API.

To follow along, you’ll need a free SerpApi account. All free accounts get 100 free searches per month. You can sign up here: https://serpapi.com/users/sign_up

Google BigQuery Setup

BigQuery requires a Google account with Google Cloud activated on it. At time of writing, Google Cloud offers $300 in free credits and 90 days to try the platform. You do need to provide a credit card for account verification, but Google will only charge your card if you explicitly opt-in to paying for Google Cloud.

BigQuery also currently advertises 10 GiB of storage and 10 TiB of queries per month for free.

You can sign up here: https://console.cloud.google.com/bigquery

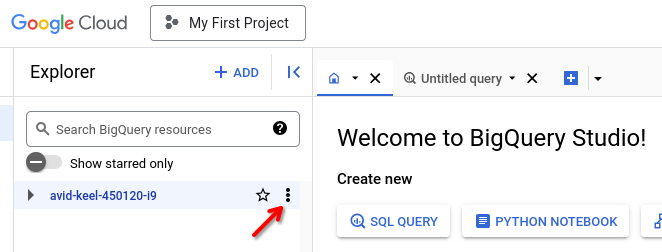

Once you complete the sign up and verification process, you’ll be taken to the BigQuery Studio. An initial project will be created automatically.

Under the Explorer sidebar, you’ll see a project ID made up of two random words, a number, and i9. For example, mine is avid-keel-450120-i9.Click the 3 vertical dots next to it to open the dropdown menu.

Then click “Create dataset”.

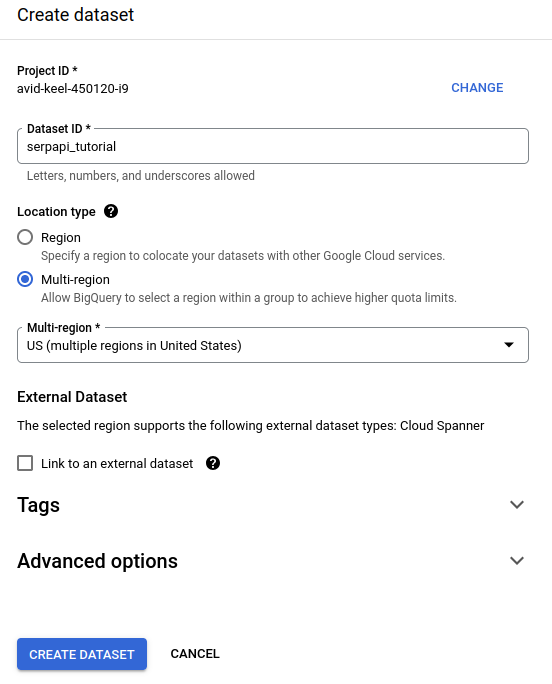

Give it a name in “Dataset ID” and select a region.

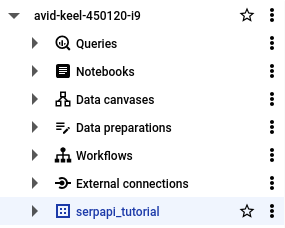

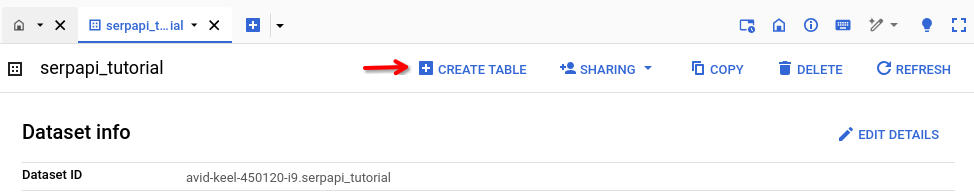

Select your new dataset from the dropdown under your Project ID.

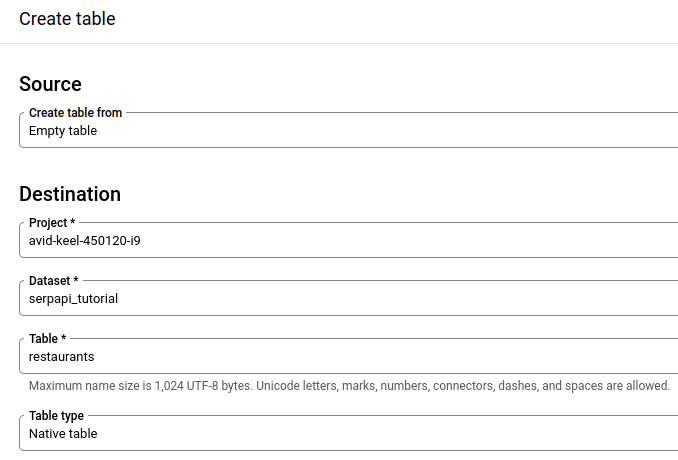

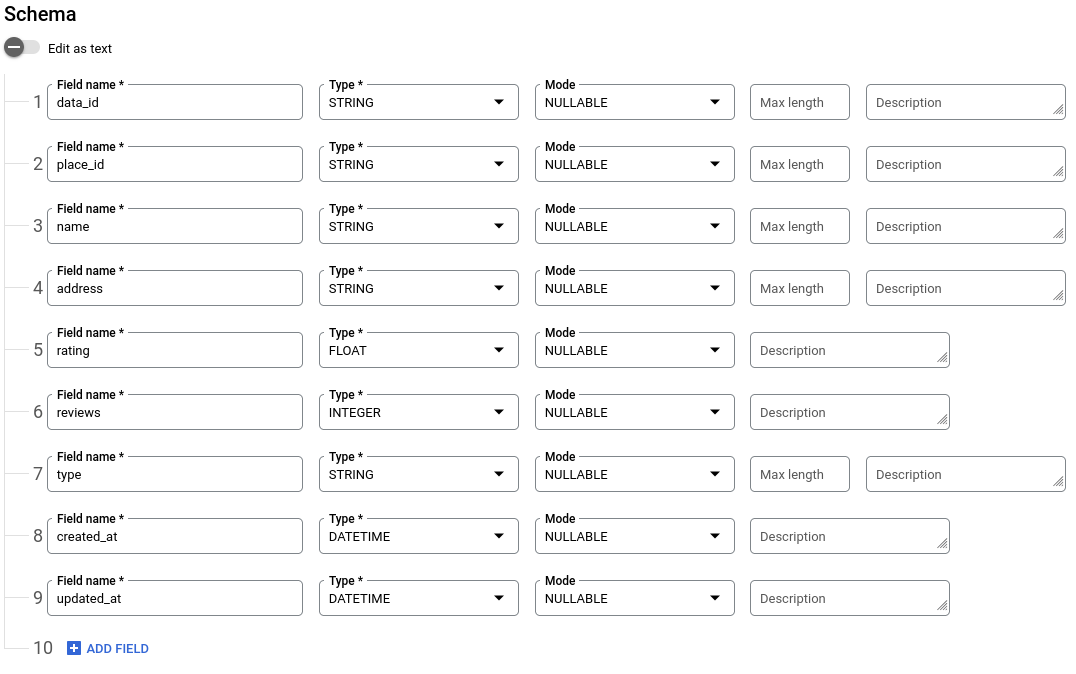

Then click “CREATE TABLE”.

You can leave everything default in the “Destination” section except you must give it a name in the “Table” form field. We’ll call it restaurants.

Add the following 9 fields to the schema. Be sure to set the “Type” correctly.

Hit “Create Table” when done.

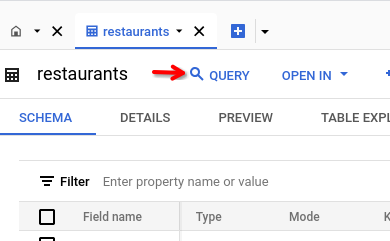

Navigate to the “Query” tab so we can try inserting some data into our new table.

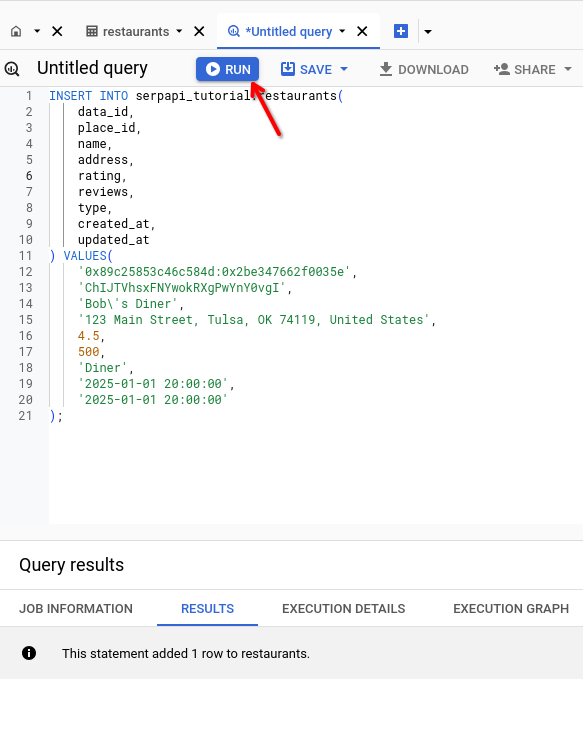

Copy and paste the following query into the query box.

INSERT INTO serpapi_tutorial.restaurants(

data_id,

place_id,

name,

address,

rating,

reviews,

type,

created_at,

updated_at

) VALUES(

'0x89c25853c46c584d:0x2be347662f0035e',

'ChIJTVhsxFNYwokRXgPwYnY0vgI',

'Bob's Diner',

'123 Main Street, Tulsa, OK 74119, United States',

4.5,

500,

'Diner',

'2025-01-01 20:00:00',

'2025-01-01 20:00:00'

);Execute the query by hitting the big blue “Run” button.

You should see “This statement added 1 row to restaurants.” under Query results.

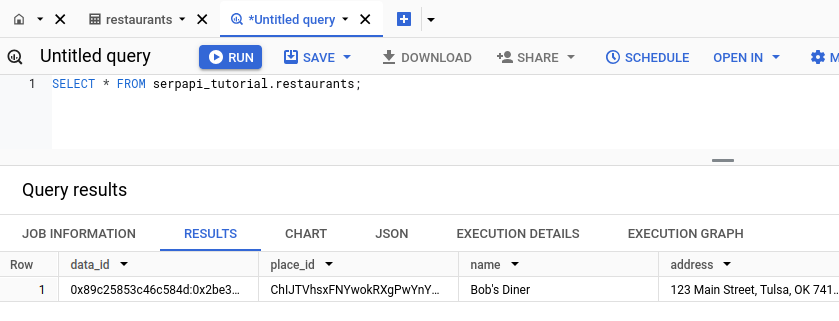

We can also validate the insert by running the following SELECT statement.

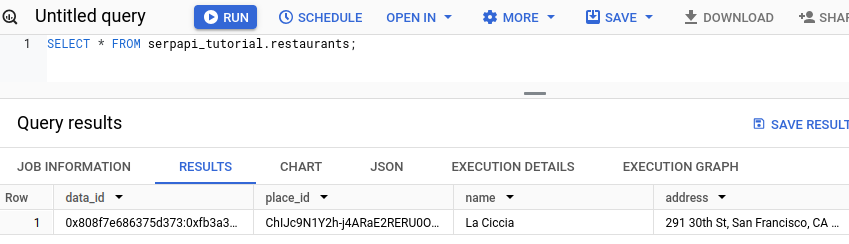

SELECT * FROM serpapi_tutorial.restaurants;Looks good!

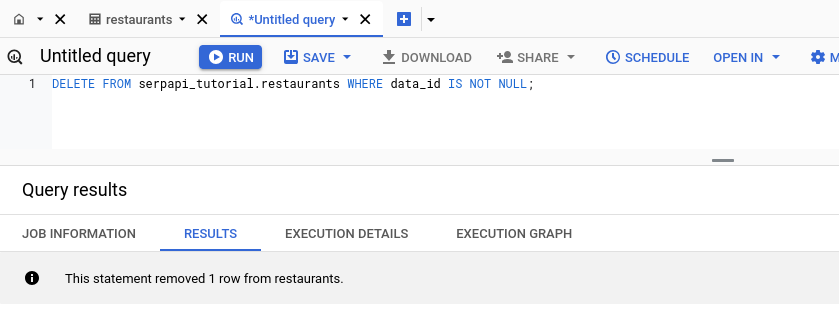

You can optionally delete this test record if you want using the DELETE statement below.

DELETE FROM serpapi_tutorial.restaurants WHERE data_id IS NOT NULL;

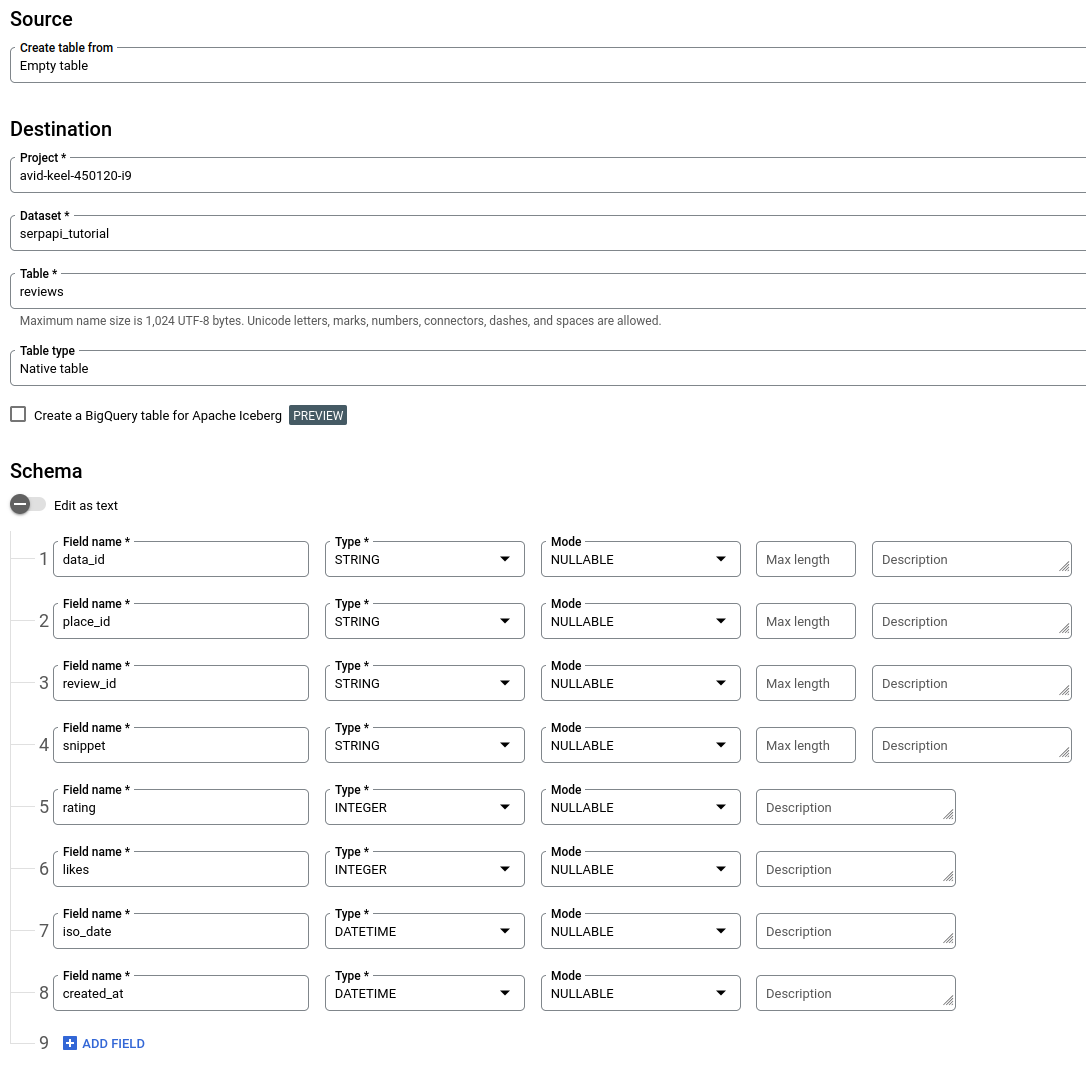

While we’re here and familiar with the process, let’s also create a table for reviews with the following settings. I won’t repeat each step this time.

Note that iso_date will store when the review was posted to Google. We’ll use created_at to track when we added it to BigQuery.

Local Development Setup

With the browser-based BigQuery setup done, we can move onto local environment setup. The easiest way to configure your local for Google Cloud APIs is to install the Google Cloud CLI. You can follow Google’s official instructions for that here: https://cloud.google.com/sdk/docs/install

Next, connect your local environment to Google Cloud by setting up ADC. Official instructions are here: https://cloud.google.com/docs/authentication/set-up-adc-local-dev-environment

Once you’ve completed the above, run gcloud init in your terminal to initialize Google Cloud CLI.

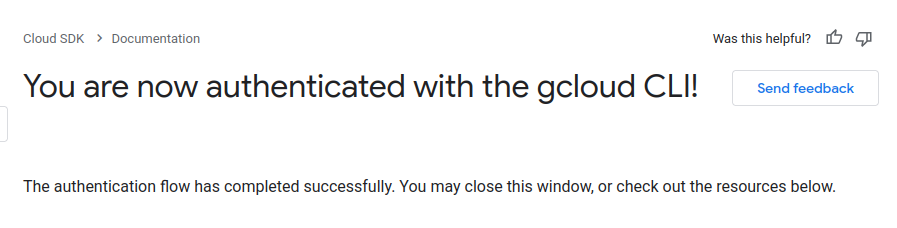

Then run gcloud auth application-default login to login. This will open a Google login page in your browser. Once you login and approve access, your credentials will be stored locally for development.

You will occasionally need to re-authenticate. You’ll receive an error with instructions to re-run the command when required.

This is optional but Google recommends setting a “quota project”. It’s worth doing just to prevent the warnings from appearing in your terminal. Run gcloud auth application-default set-quota-project your-project-id-here.

Google Cloud CLI will expect an environment variable of GCLOUD_PROJECT with your project ID. Set this by running export GCLOUD_PROJECT=your-project-id-here in your terminal.

Finally, we’ll install a couple Python libraries to make API calls to BigQuery and SerpApi. Run the following in your terminal:

pip install google-cloud-bigquery

pip install google-search-resultsInserting Data with the BigQuery API

Create a new Python file for our script.

We’ll start by adding from google.cloud import bigquery and initializing a BigQuery client. We’ll also create a constant to store our dataset name.

Populate the DATASET constant with yours. It should be your_project_id.your_table_name. For example avid-keel-450120-i9.serpapi_tutorial.restaurants in my case.

from google.cloud import bigquery

DATASET = "put_your_dataset_here" # for example "avid-keel-450120-i9.serpapi_tutorial"

CLIENT = bigquery.Client()Next, we’ll create an insert_into_bigquery method that accepts a BigQuery table_name and rows_to_insert array.

The method will fetch the table from our BigQuery instance and then insert all the rows of data from the rows_to_insert array. We’ll also include some basic logging.

def insert_into_bigquery(table_name, rows_to_insert):

table = CLIENT.get_table(f'{DATASET}.{table_name}')

try:

errors = CLIENT.insert_rows_json(table, rows_to_insert)

if errors == []:

print(f'Inserted rows successfully into {table}')

else:

print(f'Errors encountered while inserting rows')

print(errors)

except Exception as e:

print(f'Exception encountered')

print(str(e))We’ll test this by hard-coding some data and passing it to insert_into_bigquery from a main method.

def main():

restaurant_rows = []

restaurant_rows.append(

{

"data_id": '0x808f7e686375d373:0xfb3a341511914d68',

"place_id": 'ChIJc9N1Y2h-j4ARaE2RERU0Ovs',

"name": 'La Ciccia',

"address": '291 30th St, San Francisco, CA 94131 United States',

"rating": 4.6,

"reviews": 683,

"type": 'Italian restaurant',

"created_at": '2025-01-01 20:00:00',

"updated_at": '2025-01-01 20:00:00',

}

)

insert_into_bigquery("restaurants", restaurant_rows)

if __name__ == "__main__":

main()The full script should now look like this:

from google.cloud import bigquery

DATASET = "put_your_dataset_here"

CLIENT = bigquery.Client()

def insert_into_bigquery(table_name, rows_to_insert):

table = CLIENT.get_table(f'{DATASET}.{table_name}')

try:

errors = CLIENT.insert_rows_json(table, rows_to_insert)

if errors == []:

print(f'Inserted rows successfully into {table}')

else:

print(f'Errors encountered while inserting rows')

print(errors)

except Exception as e:

print(f'Exception encountered')

print(str(e))

def main():

restaurant_rows = []

restaurant_rows.append(

{

"data_id": '0x808f7e686375d373:0xfb3a341511914d68',

"place_id": 'ChIJc9N1Y2h-j4ARaE2RERU0Ovs',

"name": 'La Ciccia',

"address": '291 30th St, San Francisco, CA 94131 United States',

"rating": 4.6,

"reviews": 683,

"type": 'Italian restaurant',

"created_at": '2025-01-01 20:00:00',

"updated_at": '2025-01-01 20:00:00',

}

)

insert_into_bigquery("restaurants", restaurant_rows)

if __name__ == "__main__":

main()Give this a run and you should hopefully see Inserted rows successfully into your_table_id_here printed to your terminal.

We can also check BigQuery for the data.

SELECT * FROM serpapi_tutorial.restaurants;

Add Restaurants by Scraping Google Maps

In this step, we’ll connect to SerpApi to programmatically scrape Google Maps for restaurants.

Add from serpapi import GoogleSearch to the top of your Python script.

You can also add import os if you want to access your SerpApi API key from an environment variable.

from google.cloud import bigquery

from serpapi import GoogleSearch

import osYou can find your API key here: https://serpapi.com/manage-api-key

Run the following in your terminal to set your key as an environment variable:

export SERPAPI_KEY=put_your_key_here

We’ll store this in another constant in our script.

from google.cloud import bigquery

from serpapi import GoogleSearch

import os

DATASET = "put_your_dataset_here"

CLIENT = bigquery.Client()

SERPAPI_KEY = os.environ["SERPAPI_KEY"]We’ll house the Google Maps API logic in a get_restaurants_from_serpapi method that accepts a Google Maps query string and fires the query off to SerpApi.

def get_restaurants_from_serpapi(query):

params = {

"api_key": SERPAPI_KEY,

"engine": "google_maps",

"q": query, # For example "authentic italian restaurants in Austin, TX"

}

search = GoogleSearch(params)

results = search.get_dict()Assuming your query has more than 1 search result, the results object will have a local_results array containing up to 20 restaurants.

{

search_metadata": {

...

"created_at": "2025-02-18 11:19:50 UTC",

...

},

...

"local_results": [

{

"position": 1,

"title": "Fig Italian Kitchen & Bar",

"place_id": "ChIJndqjXQBLW4YR4e4Edn7JQ_8",

"data_id": "0x865b4b005da3da9d:0xff43c97e7604eee1",

"data_cid": "18393766848094334689",

"reviews_link": "https://serpapi.com/search.json?data_id=0x865b4b005da3da9d%3A0xff43c97e7604eee1&engine=google_maps_reviews&hl=en",

"photos_link": "https://serpapi.com/search.json?data_id=0x865b4b005da3da9d%3A0xff43c97e7604eee1&engine=google_maps_photos&hl=en",

"gps_coordinates": {

"latitude": 30.240704599999997,

"longitude": -97.7858874

},

"place_id_search": "https://serpapi.com/search.json?engine=google_maps&google_domain=google.com&hl=en&place_id=ChIJndqjXQBLW4YR4e4Edn7JQ_8",

"provider_id": "/g/11y6kfh2k4",

"rating": 4.9,

"reviews": 516,

...

}

...

]

...

}We’ll iterate over this array and append each restaurant to our rows_to_insert array. We’ll only select certain fields for each restaurant.

We need to do a little data transformation for created_at and updated_at since the timestamp we’ll use from the SerpApi JSON response includes “UTC” at the end of it. BigQuery won’t accept this.

Using the created_at field from the search_metadata object in the SerpApi response, we’ll remove “UTC” and save the modified timestamp to a timestamp variable.

def get_restaurants_from_serpapi(query):

params = {

"api_key": SERPAPI_KEY,

"engine": "google_maps",

"q": query,

}

search = GoogleSearch(params)

results = search.get_dict()

rows_to_insert = []

timestamp = results['search_metadata']['created_at'].replace(" UTC", "")

for local_result in results['local_results']:

rows_to_insert.append(

{

"data_id": local_result['data_id'],

"place_id": local_result['place_id'],

"name": local_result['title'],

"address": local_result['address'],

"rating": local_result['rating'],

"reviews": local_result['reviews'],

"type": local_result['type'],

"created_at": timestamp,

"updated_at": timestamp

}

)

return rows_to_insertLet’s update our main method to use SerpApi results to insert into BigQuery. We’ll use "authentic italian restaurants in Austin, TX" as our query.

def main():

restaurant_rows = get_restaurants_from_serpapi("authentic italian restaurants in Austin, TX")

insert_into_bigquery("restaurants", restaurant_rows)The script should now look like this:

from google.cloud import bigquery

from serpapi import GoogleSearch

import os

DATASET = "put_your_dataset_here"

CLIENT = bigquery.Client()

SERPAPI_KEY = os.environ["SERPAPI_KEY"]

def get_restaurants_from_serpapi(query):

params = {

"api_key": SERPAPI_KEY,

"engine": "google_maps",

"q": query,

}

search = GoogleSearch(params)

results = search.get_dict()

rows_to_insert = []

timestamp = results['search_metadata']['created_at'].replace(" UTC", "")

for local_result in results['local_results']:

rows_to_insert.append(

{

"data_id": local_result['data_id'],

"place_id": local_result['place_id'],

"name": local_result['title'],

"address": local_result['address'],

"rating": local_result['rating'],

"reviews": local_result['reviews'],

"type": local_result['type'],

"created_at": timestamp,

"updated_at": timestamp

}

)

return rows_to_insert

def insert_into_bigquery(table_name, rows_to_insert):

table = CLIENT.get_table(f'{DATASET}.{table_name}')

try:

errors = CLIENT.insert_rows_json(table, rows_to_insert)

if errors == []:

print(f'Inserted rows successfully into {table}')

else:

print(f'Errors encountered while inserting rows')

print(errors)

except Exception as e:

print(f'Exception encountered')

print(str(e))

def main():

restaurant_rows = get_restaurants_from_serpapi("authentic italian restaurants in Austin, TX")

insert_into_bigquery("restaurants", restaurant_rows)

if __name__ == "__main__":

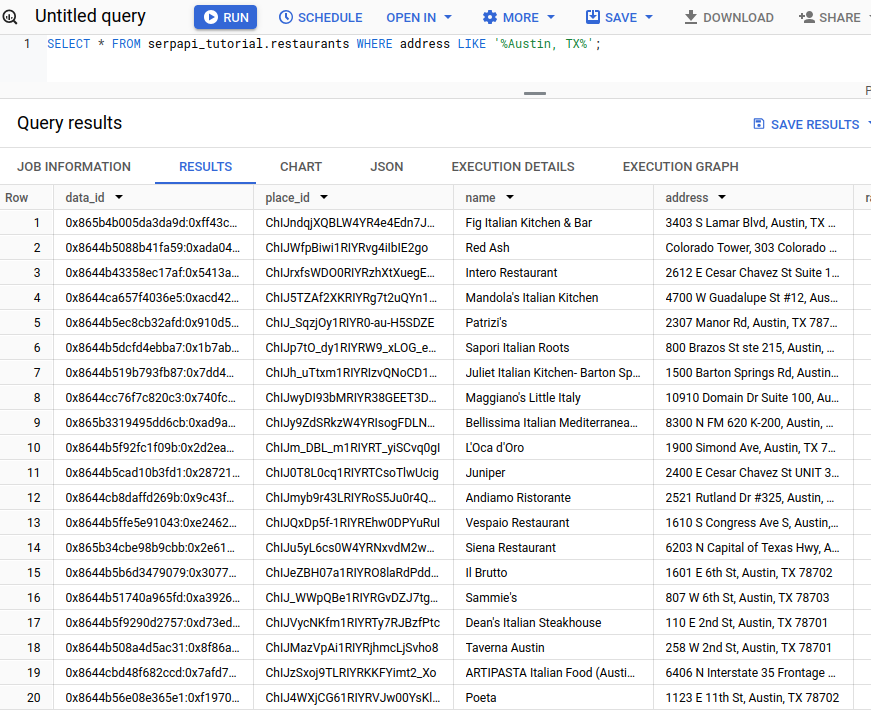

main()Try running the script and we should now have 20 Italian restaurants from Austin, TX stored in BigQuery.

SELECT * FROM serpapi_tutorial.restaurants WHERE address LIKE '%Austin, TX%';

Scraping Google Maps Reviews

With an initial set of restaurants, we can turn our attention to getting reviews.

The Google Maps Reviews API requires a data_id or place_id to look up a business. We’ll use the data_id values we scraped from the Google Maps API.

We’ll parse out these IDs from the restaurant_rows array and assign them to a data_ids variable in our main method. We’ll then pass data_ids to a get_reviews_from_serpapi method we’ll build next.

Since data_ids will contain 20 values by default and we don’t want to use so many searches while testing, I’ll only send 3 values to collect reviews for by passing data_ids[:3].

I’ve also temporarily commented out the call to insert_into_bigquery to prevent us inserting duplicate restaurants while creating the reviews logic.

def main():

restaurant_rows = get_restaurants_from_serpapi("authentic italian restaurants in Austin, TX")

# insert_into_bigquery("restaurants", restaurant_rows)

data_ids = [restaurant_row["data_id"] for restaurant_row in restaurant_rows]

review_rows = get_reviews_from_serpapi(data_ids[:3])The get_reviews_from_serpapi method will accept an array, search SerpApi’s Google Maps Reviews API with each restaurant’s data_id, and construct another rows_to_insert array. The logic is effectively the same as the get_restaurants_from_serpapi method aside from iterating over data_ids.

def get_reviews_from_serpapi(data_ids):

for data_id in data_ids:

params = {

"api_key": SERPAPI_KEY,

"engine": "google_maps_reviews",

"data_id": data_id,

}

search = GoogleSearch(params)

results = search.get_dict()You can add print(results) for each iteration to test whether you’re getting any results. You can also check your SerpApi search history to ensure the queries are hitting our API.

Now we’ll organize each review into rows we can insert into BigQuery. This requires looping over the results['reviews'] array in the API response.

Once again, we’ll manipulate the timestamp values for both created_at and iso_date to better fit BigQuery’s requirements.

We’ll also define rows_to_insert at the top of the method.

The full method should look like this:

def get_reviews_from_serpapi(data_ids):

rows_to_insert = []

for data_id in data_ids:

params = {

"api_key": SERPAPI_KEY,

"engine": "google_maps_reviews",

"data_id": data_id,

}

search = GoogleSearch(params)

results = search.get_dict()

created_at_timestamp = results['search_metadata']['created_at'].replace(" UTC", "")

for review in results['reviews']:

iso_date_timestamp = review['iso_date'].replace("T", " ").replace("Z", "")

rows_to_insert.append(

{

"data_id": results['search_parameters']['data_id'],

"review_id": review['review_id'],

"snippet": review.get('snippet', ""),

"rating": review['rating'],

"likes": review['likes'],

"iso_date": iso_date_timestamp,

"created_at": created_at_timestamp

}

)

return rows_to_insertYou can test this by dropping print(len(rows_to_insert)) just before the return. You should see 24 printed to the console. We expect 24 because we’re testing 3 restaurants and the Google Maps Reviews API returns 8 reviews on the first page.

We just need to update our main method to send these reviews to BigQuery. We can re-use the insert_into_bigquery method as before just by specifying the reviews table and providing the rows of reviews.

def main():

restaurant_rows = get_restaurants_from_serpapi("authentic italian restaurants in Austin, TX")

# insert_into_bigquery("restaurants", restaurant_rows)

rows = get_restaurants_from_bigquery()

review_rows = get_reviews_from_serpapi(rows)

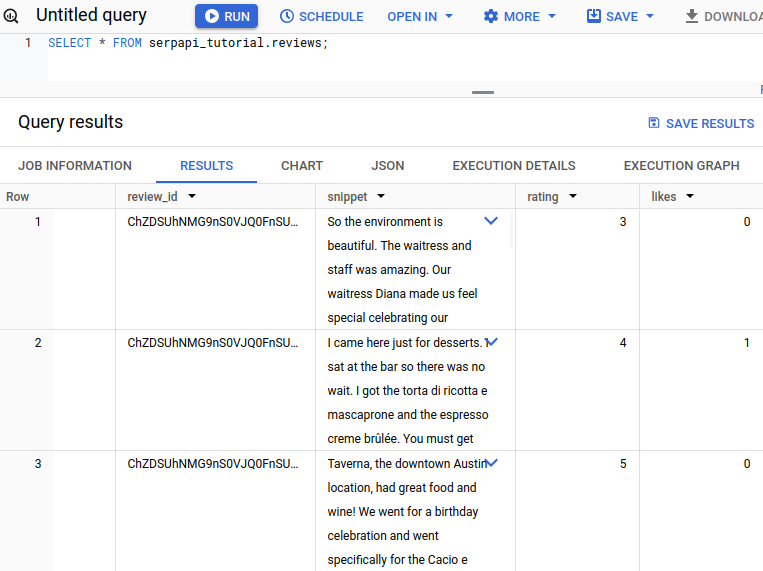

insert_into_bigquery("reviews", review_rows)Running our script, we should see Inserted successfully into your_table_id_here printed to the terminal. We should also be able to run SELECT * FROM serpapi_tutorial.reviews; and see reviews.

Finally we can uncomment the first insert_into_bigquery call and try running everything together. Let’s also update the query to search for a different city. We’ll query for Miami, FL this time.

def main():

restaurant_rows = get_restaurants_from_serpapi("authentic italian restaurants in Miami, FL")

insert_into_bigquery("restaurants", restaurant_rows)

data_ids = [restaurant_row["data_id"] for restaurant_row in restaurant_rows]

review_rows = get_reviews_from_serpapi(data_ids[:3])

insert_into_bigquery("reviews", review_rows)We should see two successful inserts logged to the terminal. We should also be able to run the following SQL and get reviews for Italian restaurants in Miami.

SELECT

res.name,

res.address,

rev.rating,

rev.snippet

FROM

serpapi_tutorial.restaurants AS res

JOIN

serpapi_tutorial.reviews AS rev

ON

res.data_id = rev.data_id

WHERE

res.address LIKE '%Miami%';

Next Steps

We’ll leave it here for now, but there’s still lots we could do. Here’s what I would tackle next.

- Add pagination: The Google Maps API will return 20 results per page. We could paginate to get more than 20 restaurants per city. Similarly, the Google Maps Reviews API will return only 8 reviews on the first page. However on subsequent pages you can request up to 20 reviews per page by adding

num=20to those requests. - Prevent duplicates: If we run the script multiple times with the same query, we’ll keep adding the same restaurants and reviews over and over. It would be better if we could identify which restaurants and reviews we’ve added. In those cases, we could update the restaurant records with the latest data and only add reviews we’ve never collected before.

Full Script

Here’s the full script for your reference.

from google.cloud import bigquery

from serpapi import GoogleSearch

import os

DATASET = "put_your_dataset_here" # for example "avid-keel-450120-i9.serpapi_tutorial"

CLIENT = bigquery.Client()

SERPAPI_KEY = os.environ["SERPAPI_KEY"]

def get_restaurants_from_serpapi(query):

params = {

"api_key": SERPAPI_KEY,

"engine": "google_maps",

"q": query,

}

search = GoogleSearch(params)

results = search.get_dict()

rows_to_insert = []

timestamp = results['search_metadata']['created_at'].replace(" UTC", "")

for local_result in results['local_results']:

rows_to_insert.append(

{

"data_id": local_result['data_id'],

"place_id": local_result['place_id'],

"name": local_result['title'],

"address": local_result['address'],

"rating": local_result['rating'],

"reviews": local_result['reviews'],

"type": local_result['type'],

"created_at": timestamp,

"updated_at": timestamp

}

)

return rows_to_insert

def get_reviews_from_serpapi(data_ids):

rows_to_insert = []

for data_id in data_ids:

params = {

"api_key": SERPAPI_KEY,

"engine": "google_maps_reviews",

"data_id": data_id,

}

search = GoogleSearch(params)

results = search.get_dict()

created_at_timestamp = results['search_metadata']['created_at'].replace(" UTC", "")

for review in results['reviews']:

iso_date_timestamp = review['iso_date'].replace("T", " ").replace("Z", "")

rows_to_insert.append(

{

"data_id": results['search_parameters']['data_id'],

"review_id": review['review_id'],

"snippet": review.get('snippet', ""),

"rating": review['rating'],

"likes": review['likes'],

"iso_date": iso_date_timestamp,

"created_at": created_at_timestamp

}

)

return rows_to_insert

def insert_into_bigquery(table_name, rows_to_insert):

table = CLIENT.get_table(f'{DATASET}.{table_name}')

try:

errors = CLIENT.insert_rows_json(table, rows_to_insert)

if errors == []:

print(f'Inserted rows successfully into {table}')

else:

print(f'Errors encountered while inserting rows ')

print(errors)

except Exception as e:

print(f'Exception encountered')

print(str(e))

def main():

restaurant_rows = get_restaurants_from_serpapi("authentic italian restaurants in Los Angeles, CA")

insert_into_bigquery("restaurants", restaurant_rows)

data_ids = [restaurant_row["data_id"] for restaurant_row in restaurant_rows]

review_rows = get_reviews_from_serpapi(data_ids[:3]) # Remove [:3] to get reviews for all possible restaurants

insert_into_bigquery("reviews", review_rows)

if __name__ == "__main__":

main()