Ten years ago, giants like Nike could pour millions into a Super Bowl ad or legacy media and call it a day. Now, companies big and small need to find, vet, and manage digital creators across a range of platforms, each with its own audience demographics, regional reach, and style. Global celebrities and local micro-influencers alike shape today’s digital marketplace, so brands must master an increasingly complex social media ecosystem. How do companies handle partnerships at scale without drowning in work?

Steamship built Everpilot to meet this challenge. The AI-powered platform autonomously discovers creators, analyzes their content, and manages campaigns. But the team quickly hit a common development hurdle: reliable data access at scale. When their in-house scrapers started breaking under load, they had to choose between building out a data infrastructure team and finding another path.

They’ve now turned to Apify to fuel their AI agents with real-time data. We spoke with Steamship’s founder, Ted Benson, to learn how his team built a platform that navigates the social media landscape with precision and contextual awareness.

“An important part of running a new company is being very careful about what you choose to build and what you choose to buy. If you try to build too much, you end up in a situation like fighting a fistfight with 10 people at the same time.”

– Ted Benson, Steamship

Beyond traditional AI

Backed by Andreessen Horowitz, Steamship began as an AI hosting platform before the LLM boom. Rather than building general-purpose AI assistants, they focused on automating niche workplace functions – the kinds of tasks that typically burn up 20 to 40 minutes of someone’s day.

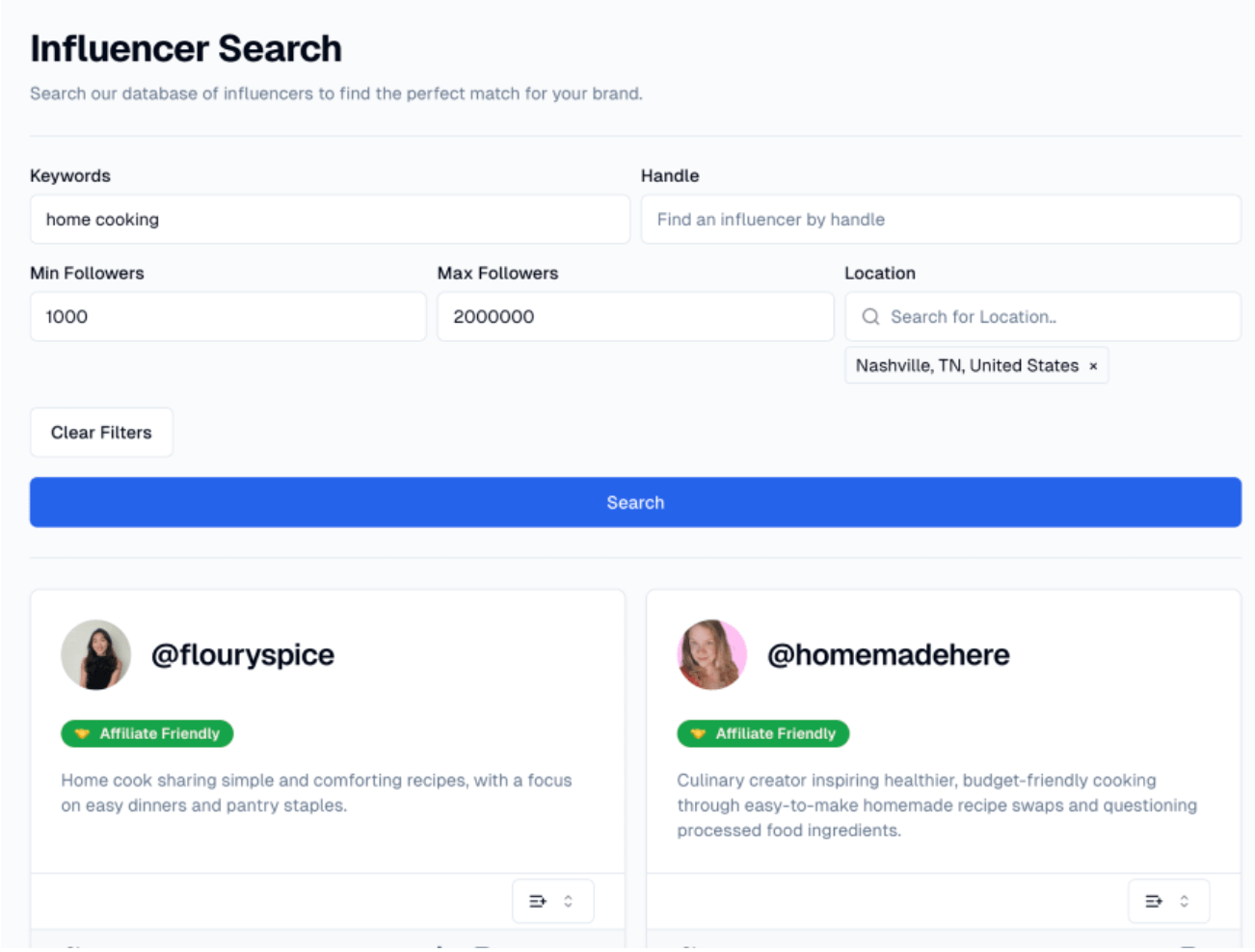

Their flagship product, Everpilot, was born when they saw marketing teams spending more hours doing influencer outreach than building strategy. Instead of managing a few major campaigns a year, these teams were now coordinating daily content drops, social takeovers, and creator collabs – all while their inboxes overflowed with new partnership requests. Finding relevant creators, vetting their content, negotiating terms, and tracking performance metrics created massive overhead. Steamship’s AI employees aim to automate this entire workflow.

“We first explored scraping ourselves and then realized the number of small problems you have to solve when trying to scrape websites at scale is so large that it’s a whole company’s worth of work.”

– Ted Benson, Steamship

Under the hood

Everpilot’s AI agents operate on a “data hunger” model: they fetch data on demand, whenever a campaign decision calls for it. To do this, they launch asynchronous cloud tasks that scan hundreds of creators on platforms like TikTok and pull stats such as follower counts and engagement. They then analyze the content and audiences of shortlisted influencers in depth, all autonomously and driven by real-time social media data. The system processes everything from post frequency to comment sentiment, giving brands a clear, actionable picture of each creator’s fit and performance.
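To make the “data hunger” pattern concrete, here is a minimal Python sketch, assuming an asyncio-based agent: it starts one fetch task per creator, caps how many run at once with a semaphore, and collects only the shallow stats needed for the next decision. It is not Steamship’s code. `fetch_creator_stats` is a hypothetical stub standing in for a real cloud scraping task (for example, an Apify Actor run), and the numbers it returns are placeholders.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class CreatorStats:
    handle: str
    followers: int
    avg_engagements: float  # average likes + comments per post


async def fetch_creator_stats(handle: str) -> CreatorStats:
    """Hypothetical stand-in for a remote scraping task.

    A real system would start a cloud scraping job here and await its results.
    This stub sleeps briefly and returns placeholder numbers derived from the
    handle length, purely so the demo is deterministic.
    """
    await asyncio.sleep(0.01)  # simulate network / job latency
    return CreatorStats(
        handle=handle,
        followers=1000 * len(handle),
        avg_engagements=50.0 * len(handle),
    )


async def scan_creators(handles: list[str], max_concurrent: int = 20) -> list[CreatorStats]:
    """Fetch stats for every handle on demand, at most `max_concurrent` at a time."""
    semaphore = asyncio.Semaphore(max_concurrent)

    async def bounded_fetch(handle: str) -> CreatorStats:
        async with semaphore:  # cap the number of in-flight tasks
            return await fetch_creator_stats(handle)

    # gather returns results in the same order as the input handles
    return await asyncio.gather(*(bounded_fetch(h) for h in handles))


if __name__ == "__main__":
    handles = [f"creator_{i}" for i in range(100)]
    results = asyncio.run(scan_creators(handles, max_concurrent=10))
    print(len(results), results[0])
```

Capping concurrency keeps a burst of on-demand requests from overwhelming the scraping backend, while `asyncio.gather` still hands back results in input order.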

Their infrastructure runs on Kubernetes clusters connected to vector databases, with data processed through platform-specific analysis pipelines. The AI agents act autonomously on this continually updated social media data. As Ted explains it, “Our AI watches TikTok, so you don’t have to.”

Scaling data

Handling complex data requirements at scale was a significant challenge. Like many companies, Steamship initially built its own scrapers but ran into the familiar hurdles: code that broke whenever a platform updated, and mounting anti-scraping barriers. Switching to Apify Actors, ready-to-use scraping tools, removed the need for a dedicated data infrastructure team and let Steamship redirect its engineering resources toward core AI development.

“Using Apify has helped us focus on the problem we’re trying to solve, and it’s been a home run. The infrastructure you’ve developed positions you as a unique player in this space, especially with your ability to handle the complexities of scraping and data processing.”

– Ted Benson, Steamship

Casting a wide net

Beyond the initial data collection challenges, Steamship faced complex technical hurdles in processing and maintaining its social media data pipeline. Their system needed to handle two distinct types of data gathering: broad discovery and deep analysis.

“We need to handle both broad but shallow searches, like finding 400 potential creators for a campaign, and narrow but deep scraping, like analyzing all posts from 10 creators we’ve shortlisted,” explains Benson.

Apify’s scrapers enable this dual approach, providing the raw data that Steamship’s system transforms into meaningful campaign analysis. “Your scrapers allow us to cast a wide net and then narrow it down. It’s exactly what we need for our campaigns,” says Benson. With reliable data access in place, Steamship could focus on building sophisticated analytics and attribution systems.
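The “cast a wide net, then narrow it down” approach can be sketched as a two-stage funnel: a cheap pass ranks every candidate on shallow profile stats, and only the top few receive an expensive per-post analysis. The engagement-rate formula, the `Candidate` fields, and the `deep_analyze` helper are illustrative assumptions, not Steamship’s actual scoring.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    handle: str
    followers: int
    avg_engagements: float  # average likes + comments per recent post


def engagement_rate(c: Candidate) -> float:
    """Shallow metric: average engagements per follower (0.0 if no followers)."""
    return c.avg_engagements / c.followers if c.followers else 0.0


def shortlist(candidates: list[Candidate], k: int, min_followers: int = 0) -> list[Candidate]:
    """Broad-but-shallow stage: filter on profile stats, rank, keep the top k."""
    eligible = [c for c in candidates if c.followers >= min_followers]
    return sorted(eligible, key=engagement_rate, reverse=True)[:k]


def deep_analyze(c: Candidate, posts: list[str]) -> dict:
    """Narrow-but-deep stage (hypothetical): look at every post of one creator.

    This placeholder only counts posts and hashtags; a real pipeline would run
    content, sentiment, and audience analysis here.
    """
    hashtags = [word for post in posts for word in post.split() if word.startswith("#")]
    return {"handle": c.handle, "posts": len(posts), "hashtags": len(hashtags)}


if __name__ == "__main__":
    pool = [
        Candidate("a", 1000, 50),
        Candidate("b", 2000, 40),
        Candidate("c", 500, 50),
    ]
    for creator in shortlist(pool, k=2):
        print(deep_analyze(creator, ["Croissants at dawn #food", "#paris #food tour"]))
```

Keeping the first stage cheap is what makes a 400-creator search affordable; the costlier deep analysis only runs on the shortlist (10 creators in Benson’s example).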

Is it really all about location?

One of the most complex challenges Steamship tackled was location attribution for content creators. “If they’re in Greece one day, Poland the next, San Francisco after that, and then Beijing, where are they really?” notes Benson, highlighting the complexity of tracking globally active creators.

To solve this, Steamship developed sophisticated attribution algorithms that go beyond simple geographical tags. Their system processes multiple dimensions of creator data, from physical presence patterns and content themes to cultural context and audience distribution.

Consider an edge case like a creator in Nebraska who exclusively posts about French cuisine. Traditional location-based targeting would miss the nuance of their actual influence. Steamship’s approach isn’t just about pinpointing where creators are physically located – it’s about understanding where their influence truly resonates, a calculation that requires processing multiple data points to paint an accurate picture of their market impact.
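One simple way to combine several location signals, shown here purely as an illustration and not as Steamship’s algorithm, is to convert each signal into a share-per-region distribution and take a weighted average. The signal names, weights, and counts below are hypothetical. In this made-up version of the Nebraska creator, physical presence points to the US while content themes and audience lean toward France, so the blended score ranks France first.

```python
from collections import defaultdict


def normalize(counts: dict[str, float]) -> dict[str, float]:
    """Turn raw per-region counts into shares that sum to 1 (empty input stays empty)."""
    total = sum(counts.values())
    return {region: value / total for region, value in counts.items()} if total else {}


def attribute_influence(
    signals: dict[str, dict[str, float]],
    weights: dict[str, float],
) -> dict[str, float]:
    """Blend several location signals into one influence score per region.

    `signals` maps a signal name to raw counts per region; `weights` says how
    much each signal counts. Signals with no data or no weight are skipped,
    and the remaining weights are renormalized so scores still sum to 1.
    """
    scores: dict[str, float] = defaultdict(float)
    used_weight = 0.0
    for name, counts in signals.items():
        shares = normalize(counts)
        weight = weights.get(name, 0.0)
        if not shares or weight <= 0:
            continue
        used_weight += weight
        for region, share in shares.items():
            scores[region] += weight * share
    if used_weight == 0:
        return {}
    # Highest-scoring region first.
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    return {region: score / used_weight for region, score in ranked}


if __name__ == "__main__":
    # Hypothetical data for a Nebraska-based creator who posts about French cuisine.
    signals = {
        "physical_presence": {"US": 30},          # e.g. geotagged posts
        "content_theme": {"FR": 8, "US": 2},      # e.g. topic classifier hits
        "audience": {"FR": 6, "US": 3, "CA": 1},  # e.g. follower locations
    }
    weights = {"physical_presence": 0.2, "content_theme": 0.4, "audience": 0.4}
    print(attribute_influence(signals, weights))
```

A weighted average is easy to inspect and tune; a production system might learn the weights or use a richer model, but the idea of scoring where influence lands rather than where a creator stands is the same.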

Future development

As their AI agents mature, Steamship keeps pushing technical boundaries. Its development roadmap includes advanced cross-platform identity resolution, sophisticated content analysis algorithms, and automated relationship mapping between creators. The team is also developing predictive analytics for campaign performance, aiming to forecast creator success before campaigns launch.

Steamship may eventually build its own data infrastructure as a cost optimization, but for now, with Apify handling data collection, the team stays focused on its core innovation: AI systems that make marketing more efficient.

“You guys are the perfect service for us. You’ve solved a huge problem that we basically now don’t have to think about. It just works.”

– Ted Benson, Steamship

Their experience shows how modern AI companies can accelerate development by carefully choosing which challenges to own. In a fast-paced space, focusing engineering resources on core innovation rather than infrastructure maintenance can be the difference between succeeding and being spread too thin.

Fueling AI agents with Apify

If you’re building AI applications that need reliable social media data, try Apify’s Actors with a free account. Our Instagram and TikTok scrapers handle platform changes, anti-scraping measures, and scaling challenges automatically. With 4,000+ specialized tools, Apify helps you extract data from any online source and connect it directly to your LLM apps, RAG pipelines, or AI agents.

Sign up today and focus on what matters – building great AI products powered by dependable data.