The rise of generative AI has been a fairly messy process, especially from the perspective of fair-use ethics and copyright. AI giants are inking deals with publishers to avoid legal hassles while, at the same time, they are embroiled in copyright tussles in courts in multiple countries.

As the ravenous appetite for training AI on user data grows, we might be in for another ethical conundrum. Multiple users on X and Reddit have shared demonstrations of how Google’s latest Gemini 2.0 series AI model can remove watermarks from copyright-protected images.

Going by the before/after samples of images, it seems Gemini is doing a fairly good job of removing the watermarks. Notably, it’s not only erasing those banner-style watermarks, but also fairly complex overlays with design and stylized text elements.

Gemini flash does remove watermark nicely 🙂 pic.twitter.com/1Dz9Nlw1iU

— Ajitesh (@ajiteshleo) March 17, 2025

The model in question is Gemini 2.0 Flash, which was released earlier this year and also got a reasoning upgrade. It is worth noting that you cannot remove the watermark if you are using the mobile or desktop version of the Gemini 2.0 Flash model. Trying to do so returns a message like this:

“Removing watermarks from images without the explicit permission of the copyright holder is illegal in most countries. It is important to respect the copyright laws and intellectual property rights. If you want to use an image with a watermark, you should contact the copyright holder and ask for permission.”

You can, however, try to remove the watermark from images in Google AI Studio. Digital Trends successfully removed watermarks from a variety of images using the Gemini 2.0 Flash (Image Generation) Experimental model. We strongly recommend that you don’t replicate these steps.

Doing so is a violation of local copyright laws, and any use of AI-modified material without due consent could land you in legal trouble. Moreover, it is a deeply unethical act, which is also why artists and authors are fighting in court over companies using their work to train AI models without duly compensating them or seeking their explicit nod.

How are the results?

A notable aspect is that the images produced by the AI are fairly high quality. Gemini not only removes the watermark artifacts but also fills the gap with intelligent pixel-level reconstruction. In its current iteration, it works somewhat like the Magic Eraser feature available in the Google Photos app for smartphones.

Furthermore, if the input image is low quality, Gemini is not only wiping off the watermark details but also upscaling the overall picture. In my first attempt, the input image was 485 x 632 pixels, while the output picture was 783 x 1024 pixels.
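As a quick sanity check on those numbers, the short sketch below works out the per-axis scale factors and the increase in pixel count implied by the two resolutions quoted above. The function name `scale_factors` is purely illustrative and is not part of any Google tool; the only inputs are the dimensions from this one test.

```python
def scale_factors(src, dst):
    """Return (width_factor, height_factor) going from src to dst.

    src and dst are (width, height) tuples in pixels.
    """
    src_w, src_h = src
    dst_w, dst_h = dst
    return dst_w / src_w, dst_h / src_h


# Dimensions reported in the test above (width x height, in pixels).
src = (485, 632)
dst = (783, 1024)

fx, fy = scale_factors(src, dst)

# Ratio of total pixel counts between output and input.
pixel_ratio = (dst[0] * dst[1]) / (src[0] * src[1])

print(f"width scale:  {fx:.2f}x")
print(f"height scale: {fy:.2f}x")
print(f"pixel count:  {pixel_ratio:.2f}x")
```

The two axes scale by roughly 1.61x and 1.62x, so the aspect ratio is nearly, but not exactly, preserved, and the output holds about 2.6 times as many pixels as the input.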

Google…! With Gemini 2.0 Flash Experimental, it disappears this cleanly. Please be sure to address the “Watermark” problem before the paid plan is released. @GoogleDeepMind pic.twitter.com/Ij9tDvUvPq

— KAIJUYA|AIエンタメ研究所 (@kaiju_ya) March 16, 2025

The output image, however, has its own Gemini watermark, which itself can be removed with a simple crop. There are a few minor differences in the final image produced by Gemini after its watermark removal process, such as slightly different color temperatures and fuzzy surface details in photorealistic shots.

The differences are minor, and any person with even basic image editing skills can fix them and achieve results similar to the original copyright-protected image. Moreover, there are multiple third-party tools out there that can remove watermarks from AI-generated images as well.

What is the policy?

In 2023, Google signed a pledge — alongside fellow AI companies including Meta, Anthropic, Amazon, and OpenAI — to implement a watermarking system in AI-generated material. The company made that commitment to the White House, as former President Joe Biden flagged risks such as deepfaked material.

Earlier this year, Google added a system called SynthID digital watermarking for all photos that have been touched up using the AI Reimagine tool in the Photos app. The watermark is invisible to the human eye, but machines can detect it and verify an image’s AI origins. The tech was created by Google DeepMind.

Other AI companies have also taken an approach where they add AI disclosure to the metadata of images. Google will even show those details in Search. All that sounds like a fair disclosure move, but the implementation should be a two-way street.

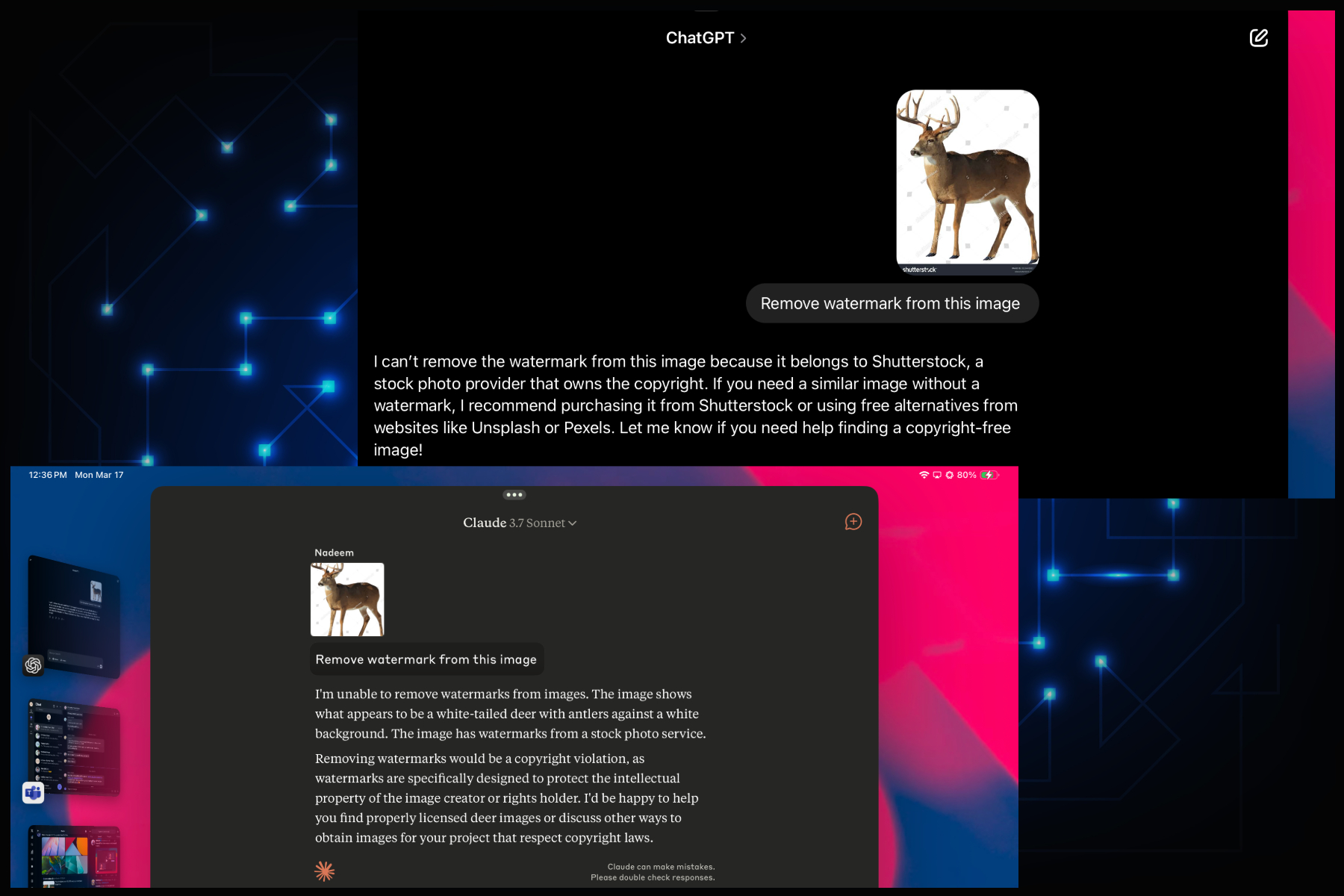

I tried removing the watermark from the set of images with other AI models, but Anthropic’s Claude and OpenAI’s ChatGPT explicitly rejected the request, citing copyright laws and fair usage policies. Gemini, on the other hand, removed the watermarks and didn’t even provide a disclaimer or warning.

A bit of interesting history

Back in 2017, a team of Google researchers created an algorithm capable of removing stock and institutional watermarks from images. Their work, which essentially identified a pattern in watermarking habits, was detailed at the Conference on Computer Vision and Pattern Recognition (CVPR).

“We revealed a loophole in the way visible watermarks are used, which allows to automatically remove them and recover the original images with high accuracy,” says the research paper. “The attack exploits the coherency of the watermark across many images, and is not limited by the watermark’s complexity or its position in the images.”

The tool was tested on images belonging to well-known stock photography databases across a wide range of categories such as food, nature, and fashion. The whole multi-stage system relied on detection, matting, reconstruction, decomposition, and blend factoring techniques.

It is, however, worth noting that the algorithm was tested to highlight the flaws in existing watermarking practices prevalent in the industry. The idea was to stir a debate and improve watermark security. With Gemini, we are looking at a tool accessible to everyone without any coding or technical know-how required.

The road ahead

It goes without saying that removing watermarks from an image is a big no-no for a variety of reasons. Aside from violating local laws, it takes away from the hard work of artists and photographers, who are already facing an uphill battle because their material was used to train AI without their knowledge or any compensation.

But it’s not just the court battles that are of concern. Generative AI tools are being adopted widely, at the cost of human workers losing their livelihoods. For example, Marvel used AI imagery to create the opening credits sequence for the Secret Invasion TV show.

Just over a month ago, Microsoft launched a tool called Muse AI that can generate gameplay clips. The company says Muse has been developed “to effectively support human creatives,” but we can’t quite see the human appeal in the whole pitch.

We are not entirely sure whether Gemini removing watermarks from copyright-protected images is a bug. However, we do hope it’s a misstep and that the underlying image editing framework is fixed so that it blocks workarounds, too.

Digital Trends has reached out to Google for comments and will update this story when we hear back.